A World Without Programming (4)

Handwritten Digit Recognition

import numpy as np
import matplotlib.pyplot as plt
from sklearn import datasets

digits = datasets.load_digits()

digits.images.shape

(1797, 8, 8)

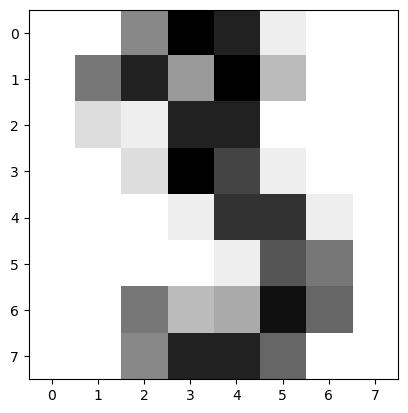

digits.images[3]

array([[ 0.,  0.,  7., 15., 13.,  1.,  0.,  0.],
       [ 0.,  8., 13.,  6., 15.,  4.,  0.,  0.],
       [ 0.,  2.,  1., 13., 13.,  0.,  0.,  0.],
       [ 0.,  0.,  2., 15., 11.,  1.,  0.,  0.],
       [ 0.,  0.,  0.,  1., 12., 12.,  1.,  0.],
       [ 0.,  0.,  0.,  0.,  1., 10.,  8.,  0.],
       [ 0.,  0.,  8.,  4.,  5., 14.,  9.,  0.],
       [ 0.,  0.,  7., 13., 13.,  9.,  0.,  0.]])

fig = plt.figure()

ax = fig.add_subplot()

ax.imshow(digits.images[3], cmap=plt.cm.gray_r)

plt.show()

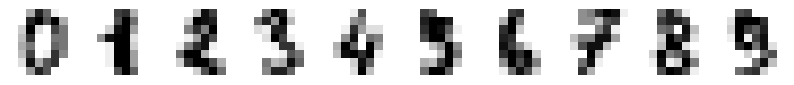

_, axes = plt.subplots(nrows=1, ncols=10, figsize=(10, 3))

for ax, image, label in zip(axes, digits.images, digits.target):
    ax.set_axis_off()
    ax.imshow(image, cmap=plt.cm.gray_r, interpolation="nearest")
    #ax.set_title("Training: %i" % label)

Using a larger dataset

from sklearn.datasets import fetch_openml

X, y = fetch_openml("mnist_784", version=1, return_X_y=True, as_frame=False)

X.shape, y.shape

((70000, 784), (70000,))

np.sqrt(784)

28.0
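Each row of `X` holds one image flattened into 784 values, and the square root above suggests a 28 × 28 layout. The following is a minimal sketch of that flatten/reshape round trip on a small synthetic array, assuming the pixels are stored row by row; the names `image`, `flat`, and `restored` are illustrative and not part of the dataset.

```python
import numpy as np

# A synthetic 28x28 "image" whose pixel value encodes its position (illustrative only).
image = np.arange(28 * 28).reshape(28, 28)

# Flattening concatenates the rows: this mirrors the layout of one row of X.
flat = image.reshape(-1)

# Pixel (row r, column c) of the image sits at index r * 28 + c of the flat vector.
r, c = 5, 18
assert flat[r * 28 + c] == image[r, c]

# Reshaping back recovers the original 2-D image.
restored = flat.reshape(28, 28)
print(flat.shape, restored.shape)
```

This is why `X[3].reshape((28,28))` can be passed directly to `imshow`.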

X[3].reshape((28,28))

array([[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 124, 253, 255, 63, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 96, 244, 251, 253, 62, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 127, 251, 251, 253, 62, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 68, 236, 251, 211, 31, 8, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 60, 228, 251, 251, 94, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 155, 253, 253, 189, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 20, 253, 251, 235, 66, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

32, 205, 253, 251, 126, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

104, 251, 253, 184, 15, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 80,

240, 251, 193, 23, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 32, 253,

253, 253, 159, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 151, 251,

251, 251, 39, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 48, 221, 251,

251, 172, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 234, 251, 251,

196, 12, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 253, 251, 251,

89, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 159, 255, 253, 253,

31, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 48, 228, 253, 247, 140,

8, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 64, 251, 253, 220, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 64, 251, 253, 220, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 24, 193, 253, 220, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0]])

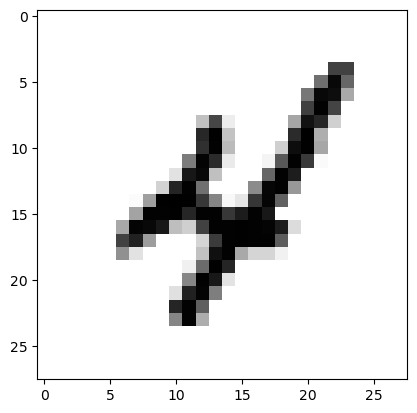

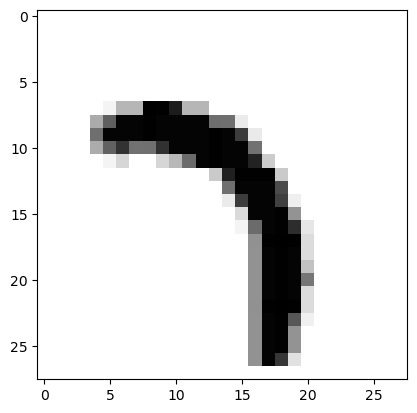

fig = plt.figure()

ax = fig.add_subplot()

ax.imshow(X[9].reshape((28,28)), cmap=plt.cm.gray_r)

plt.show()

y[9]

'4'

X = X / 255.0
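The division above rescales the raw 0 to 255 intensities into the range [0, 1], which tends to make gradient-based training better behaved. A minimal sketch on three sample intensities (the array `raw` is illustrative, using values that occur in the image printed earlier):

```python
import numpy as np

# Three raw pixel intensities in the 0-255 range.
raw = np.array([0, 124, 255], dtype=np.uint8)

# Dividing by 255.0 promotes the integers to floats and maps the range to [0, 1].
scaled = raw / 255.0
print(scaled)
```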

X[3].reshape((28,28))

array([[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.48627451, 0.99215686,

1. , 0.24705882, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.37647059, 0.95686275, 0.98431373,

0.99215686, 0.24313725, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.49803922, 0.98431373, 0.98431373,

0.99215686, 0.24313725, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.26666667, 0.9254902 , 0.98431373, 0.82745098,

0.12156863, 0.03137255, 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.23529412, 0.89411765, 0.98431373, 0.98431373, 0.36862745,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.60784314, 0.99215686, 0.99215686, 0.74117647, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.07843137,

0.99215686, 0.98431373, 0.92156863, 0.25882353, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.1254902 , 0.80392157,

0.99215686, 0.98431373, 0.49411765, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.40784314, 0.98431373,

0.99215686, 0.72156863, 0.05882353, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.31372549, 0.94117647, 0.98431373,

0.75686275, 0.09019608, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.1254902 , 0.99215686, 0.99215686, 0.99215686,

0.62352941, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.59215686, 0.98431373, 0.98431373, 0.98431373,

0.15294118, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.18823529, 0.86666667, 0.98431373, 0.98431373, 0.6745098 ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.91764706, 0.98431373, 0.98431373, 0.76862745, 0.04705882,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.99215686, 0.98431373, 0.98431373, 0.34901961, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.62352941,

1. , 0.99215686, 0.99215686, 0.12156863, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.18823529, 0.89411765,

0.99215686, 0.96862745, 0.54901961, 0.03137255, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.25098039, 0.98431373,

0.99215686, 0.8627451 , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.25098039, 0.98431373,

0.99215686, 0.8627451 , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.09411765, 0.75686275,

0.99215686, 0.8627451 , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ],

[0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. ]])

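Split the data into a training set and a test set. Note that `test_size=0.7` puts 70% of the samples in the test set, leaving 30% for training: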

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0, test_size=0.7)

list(map(np.shape,(X_train,X_test,y_train,y_test)))

[(21000, 784), (49000, 784), (21000,), (49000,)]
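To make the effect of `test_size` concrete, here is a small sketch on synthetic data. `X_demo` and `y_demo` are made-up arrays for illustration only; the call itself is the same `train_test_split` used above.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# 100 synthetic samples with 4 features each, plus dummy labels (illustrative only).
X_demo = np.arange(400).reshape(100, 4)
y_demo = np.arange(100)

# test_size=0.7 sends 70% of the samples to the test set and the rest to training.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, random_state=0, test_size=0.7
)
print(X_tr.shape, X_te.shape)
```

Every sample ends up in exactly one of the two sets, and fixing `random_state` makes the shuffle reproducible.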

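from sklearn.neural_network import MLPClassifier

mlp = MLPClassifier(
    hidden_layer_sizes=(40,),  # one hidden layer with 40 units
    max_iter=8,                # at most 8 passes over the training data
    alpha=1e-4,                # L2 regularization strength
    solver="sgd",              # stochastic gradient descent
    verbose=10,                # print the loss at each iteration
    random_state=1,
    learning_rate_init=0.2,
)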
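With `hidden_layer_sizes=(40,)`, the classifier maps 784 inputs through a single hidden layer of 40 units to 10 output classes. The following is a rough sketch of the forward computation such a network performs, assuming scikit-learn's default ReLU hidden activation and a softmax output. The weights here are random stand-ins rather than trained values, and all names (`W1`, `b1`, `W2`, `b2`, `x`) are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Randomly initialised parameters, standing in for trained ones (illustrative only).
W1, b1 = rng.normal(scale=0.1, size=(784, 40)), np.zeros(40)  # input -> hidden
W2, b2 = rng.normal(scale=0.1, size=(40, 10)), np.zeros(10)   # hidden -> output

x = rng.random(784)  # one fake image with pixel values in [0, 1)

hidden = np.maximum(0.0, x @ W1 + b1)   # ReLU activation
logits = hidden @ W2 + b2
probs = np.exp(logits - logits.max())   # softmax, shifted for numerical stability
probs /= probs.sum()

predicted_class = int(np.argmax(probs))
print(probs.shape, predicted_class)
```

Training adjusts the weight matrices and bias vectors so that the largest probability lands on the correct digit.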

import warnings

from sklearn.exceptions import ConvergenceWarning

with warnings.catch_warnings():
    warnings.filterwarnings("ignore", category=ConvergenceWarning, module="sklearn")
    mlp.fit(X_train, y_train)

Iteration 1, loss = 0.44139186

Iteration 2, loss = 0.19174891

Iteration 3, loss = 0.13983521

Iteration 4, loss = 0.11378556

Iteration 5, loss = 0.09443967

Iteration 6, loss = 0.07846529

Iteration 7, loss = 0.06506307

Iteration 8, loss = 0.05534985

print("Training set score: %f" % mlp.score(X_train, y_train))

print("Test set score: %f" % mlp.score(X_test, y_test))

Training set score: 0.986429

Test set score: 0.953061
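For a classifier, `score` reports the mean accuracy: the fraction of samples whose predicted label equals the true label. A minimal sketch with made-up labels (`y_true` and `y_pred` are hypothetical, not taken from the run above):

```python
import numpy as np

# Hypothetical labels and predictions, stored as strings like the MNIST targets.
y_true = np.array(["7", "2", "1", "0", "4"])
y_pred = np.array(["7", "2", "1", "0", "9"])

# Accuracy is the fraction of predictions that match the true labels.
accuracy = np.mean(y_pred == y_true)
print(accuracy)  # 0.8
```

The gap between the training score (about 0.986) and the test score (about 0.953) indicates that the model fits the data it was trained on somewhat better than unseen data.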

mlp.predict([X_test[9]]), y_test[9]

(array(['7'], dtype='<U1'), '7')

fig = plt.figure()

ax = fig.add_subplot()

ax.imshow(X_test[9].reshape((28,28)), cmap=plt.cm.gray_r)

plt.show()

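As a result of training, each layer now carries learned weights. The following visualizes the weights of the first layer (input to hidden), one 28 × 28 image per hidden unit: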

mlp.coefs_[0].shape

(784, 40)
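`coefs_` holds one weight matrix per layer transition, each of shape (inputs, outputs). As a quick sketch, the same structure can be inspected on the small built-in digits dataset, which has 64 features instead of 784. The variable names and the reduced `max_iter` are chosen here only to keep the example fast.

```python
import warnings

from sklearn import datasets
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPClassifier

# Small built-in digits dataset: 64 input features, 10 classes.
digits_small = datasets.load_digits()
X_small = digits_small.data / 16.0  # pixel values range from 0 to 16

clf = MLPClassifier(hidden_layer_sizes=(40,), max_iter=5, random_state=1)
with warnings.catch_warnings():
    # Five iterations are not enough to converge; silence the expected warning.
    warnings.filterwarnings("ignore", category=ConvergenceWarning)
    clf.fit(X_small, digits_small.target)

# One weight matrix per layer transition: input -> hidden and hidden -> output.
shapes = [w.shape for w in clf.coefs_]
print(shapes)
```

Each column of the first matrix belongs to one hidden unit, which is why transposing it yields one weight vector per unit that can be reshaped into an image.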

fig, axes = plt.subplots(5, 8, figsize=(14,14))

# use global min / max to ensure all weights are shown on the same scale

vmin, vmax = mlp.coefs_[0].min(), mlp.coefs_[0].max()

for coef, ax in zip(mlp.coefs_[0].T, axes.ravel()):
    ax.matshow(coef.reshape(28, 28), cmap=plt.cm.gray, vmin=0.5 * vmin, vmax=0.5 * vmax)
    ax.set_xticks(())
    ax.set_yticks(())
    ax.set_aspect('equal')

plt.tight_layout()

#plt.subplots_adjust(hspace=None)

plt.show()